Low Power Image Recognition Challenge 2018

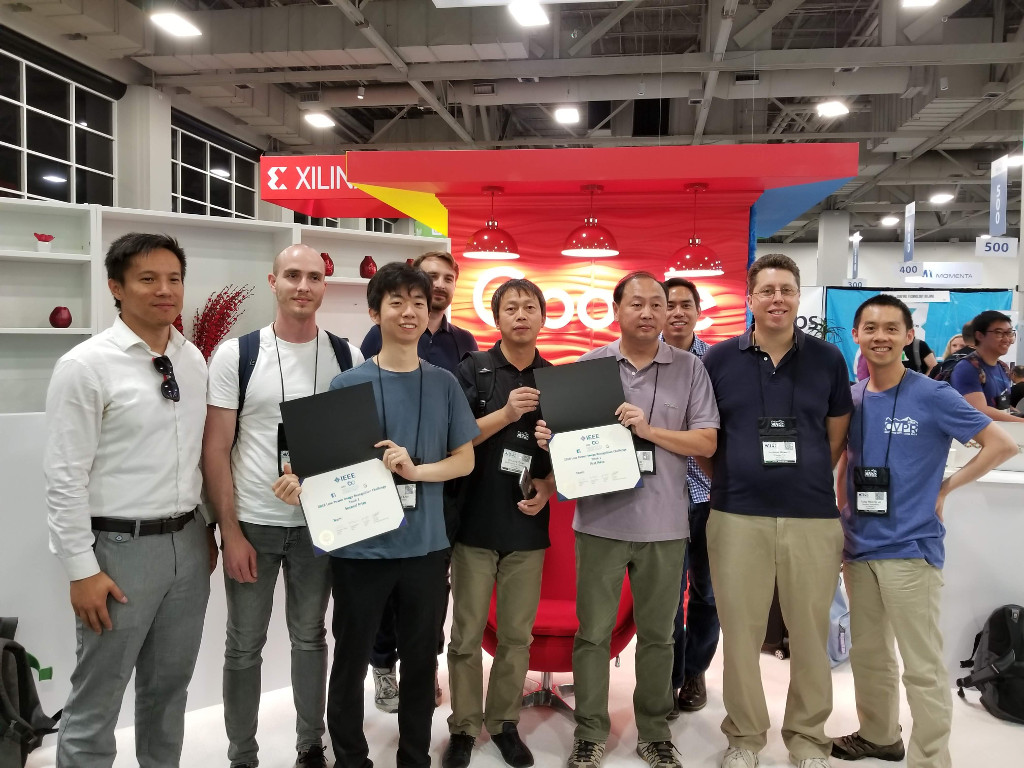

Last week (June 18-22, 2018), two members of the Machine Learning team at Hyperconnect attended the Computer Vision and Pattern Recognition (CVPR) conference in Salt Lake City, Utah. Before CVPR, the Machine Learning team took part in one of the challenges, the Low Power Image Recognition Challenge (LPIRC), jointly organized by Purdue University and Google.

Low-power Image Recognition Challenge 2018

For the last three years, LPIRC has focused on the inference challenges of low-power embedded devices. This year, the Hyperconnect Machine Learning team took part in LPIRC and won second place.

The goal of the challenge was to create a TensorFlow Lite model with the best classification accuracy and speed.

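The tradeoff between accuracy and speed was resolved by the score \(\text{acc} / \max(30 \times T, \text{runtime})\), where acc is the conventionally defined accuracy and runtime is the total time (in ms) to process all \(T\) images. In other words, running faster than an average of 30 ms per image does not improve the score any further.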
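To make the scoring rule concrete, here is a minimal sketch of it in Python. The function name `lpirc_score` and its parameter names are our own illustration, not part of the official evaluation code; the 30 ms per-image budget comes from the formula above.

```python
def lpirc_score(accuracy, runtime_ms, num_images, budget_ms_per_image=30.0):
    """Score = acc / max(30 * T, runtime), as described in the text.

    accuracy            -- conventionally defined accuracy of the model
    runtime_ms          -- total time in milliseconds to process all images
    num_images          -- T, the number of evaluated images
    budget_ms_per_image -- the 30 ms constant from the formula
    """
    return accuracy / max(budget_ms_per_image * num_images, runtime_ms)


# Illustrative numbers only (not results from the competition).
T = 20_000
at_25_ms = lpirc_score(0.60, runtime_ms=25.0 * T, num_images=T)
at_30_ms = lpirc_score(0.60, runtime_ms=30.0 * T, num_images=T)
at_40_ms = lpirc_score(0.60, runtime_ms=40.0 * T, num_images=T)
```

With these inputs, `at_25_ms` equals `at_30_ms`, while `at_40_ms` is lower: below the 30 ms budget only accuracy matters, and above it every extra millisecond costs score.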

Each team could submit up to three models per day, and each submission was evaluated on both the ImageNet validation set and a held-out test set freshly collected for the competition.

Validation results were made available to participants shortly after submission, and based on these results each team selected three final models to compete on test-set scores.

The benchmark environment was a Google Pixel 2, using only a single big core.

The validation dataset contained 20,000 images.

Benchmarking tool

At the time of the challenge, TensorFlow Lite did not support per-layer latency measurement, so we built our own benchmarking tool. Unlike the OVIC benchmark, it did not require recompiling the Android project, and it summarized the latency of individual layers. A week after the competition, the TensorFlow team released the new TFLite Model Benchmark Tool, which would have been very helpful for the challenge.

Approaches

Even though deeper models show outstanding performance, it is hard to use them directly in real-world services. They usually consume too much memory, computing resources, and power. Many studies[1] have addressed these problems, and LPIRC is part of that effort. Among the available techniques, we tried some of the best-known methods:

- Quantization

- Low Rank Factorization

- Model Distillation

- Additional Datasets

Quantization

We applied 8-bit quantization to use an integer-only inference pipeline, which is more efficient than floating-point inference on mobile devices[2]. This improved inference speed considerably without a significant loss of accuracy. We used the TensorFlow Quantization Tool to convert the floating-point model to a quantized model.
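As a rough illustration of what 8-bit quantization does to a tensor, the following NumPy sketch implements the affine mapping `real = scale * (q - zero_point)` described in [2]. It is not the code of the TensorFlow tool we used; the function names are hypothetical and the min/max range selection is a simplifying assumption.

```python
import numpy as np


def quantize_params(x_min, x_max, num_bits=8):
    """Pick scale and zero point so [x_min, x_max] maps onto [0, 2^bits - 1]."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # The range must contain 0.0 so that zero is exactly representable.
    x_min, x_max = min(float(x_min), 0.0), max(float(x_max), 0.0)
    if x_max == x_min:  # degenerate all-zero range
        return 1.0, 0
    scale = (x_max - x_min) / (qmax - qmin)
    zero_point = int(round(qmin - x_min / scale))
    return scale, zero_point


def quantize(x, scale, zero_point, num_bits=8):
    """Map float values to unsigned 8-bit integers."""
    q = np.round(x / scale) + zero_point
    return np.clip(q, 0, 2 ** num_bits - 1).astype(np.uint8)


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the integers."""
    return scale * (q.astype(np.float32) - zero_point)


# Illustrative usage on made-up values.
weights = np.linspace(-1.0, 3.0, 101)
scale, zero_point = quantize_params(weights.min(), weights.max())
restored = dequantize(quantize(weights, scale, zero_point), scale, zero_point)
```

Each restored value differs from the original by at most about one quantization step (`scale`), which is why accuracy usually degrades only slightly.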

Low Rank Factorization

Low rank factorization, or compression, is a method to reduce the amount of computation in a network while preserving its performance. In a traditional convolutional neural network, the most time-consuming part is the convolution operation (though in extreme classification the last softmax layer is the bottleneck). An \(N \times N\) convolution can be decomposed into multiple smaller operations: \(N \times 1\) followed by \(1 \times N\), depthwise convolution followed by \(1 \times 1\), and so on. MobileNet[3] is one example that adopts depthwise and pointwise (\(1 \times 1\)) convolutions as an efficient model for mobile devices. After factorizing the large convolution units, however, the \(1 \times 1\) convolution becomes the most time-consuming operator, accounting for more than 90 percent of the total computation.

A \(1 \times 1\) convolution can be easily factorized by singular value decomposition, keeping only the largest singular values. For an \(N \times M\) convolution \((N \leq M)\), keeping \(k\) singular values costs \(k(N + M)\) multiplications per position instead of \(N \cdot M\). If we keep fewer than \(\frac{N}{2}\) singular values, the total amount of computation is reduced, since \(k(N + M) < \frac{N \cdot N + N \cdot M}{2} \leq \frac{N \cdot M + N \cdot M}{2} = N \cdot M\). Factorization is done one layer at a time: we freeze all parameters except those of the factorized layer and fine-tune to recover the performance. We observed that when quantized MobileNet version 1 (input size \(160 \times 160\)) has 9 factorized \(1 \times 1\) convolution layers, inference is up to 16 % faster (43 ms to 36 ms in our benchmark) while losing roughly 6 % of its accuracy (67.3 to 63.59 on the ImageNet validation set).
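The factorization step can be sketched with NumPy as below. This is an illustration under assumptions rather than our training code: the \(1 \times 1\) convolution weight is treated as a plain \(N \times M\) matrix, and the names `factorize_pointwise` and `macs_per_position` are hypothetical. Fine-tuning after factorization is not shown.

```python
import numpy as np


def factorize_pointwise(weight, rank):
    """Split an (N, M) pointwise-convolution weight into two thinner ones.

    Returns (first, second) with shapes (rank, M) and (N, rank) such that
    second @ first is the best rank-`rank` approximation of `weight`.
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    first = s[:rank, None] * vt[:rank]  # maps M channels down to `rank`
    second = u[:, :rank]                # maps `rank` channels up to N
    return first, second


def macs_per_position(n, m, rank=None):
    """Multiply-accumulates per spatial position, before or after factorization."""
    return n * m if rank is None else rank * (n + m)


# Illustrative usage with a random weight (N = 64, M = 128, k = 16 < N / 2).
rng = np.random.default_rng(0)
weight = rng.standard_normal((64, 128))
first, second = factorize_pointwise(weight, rank=16)
original_cost = macs_per_position(64, 128)           # N * M
factorized_cost = macs_per_position(64, 128, 16)     # k * (N + M)
```

In a network, the single \(1 \times 1\) layer would be replaced by two consecutive \(1 \times 1\) layers using `first` and then `second`. A random matrix like the one above has no low-rank structure, so the approximation error here is large; trained weights followed by fine-tuning are what make the accuracy loss tolerable.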

Model Distillation

Model distillation has become almost standard practice for training our deep learning models, and LPIRC was no exception. For this challenge we used one of the basic approaches, combining two training losses:

- cross-entropy loss of predictions from student model and original labels

- cross-entropy loss of student logits and soft labels (logits) from teacher model
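The two losses above can be combined as in the following NumPy sketch. The mixing weight `alpha` and the `temperature` parameter are assumptions added for illustration (the text only says that two losses are mixed), and the function names are hypothetical.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits, teacher_logits, labels,
                      alpha=0.5, temperature=1.0):
    """alpha * CE(student, labels) + (1 - alpha) * CE(student, teacher).

    student_logits, teacher_logits -- arrays of shape (batch, classes)
    labels                         -- integer class ids of shape (batch,)
    alpha, temperature             -- assumed hyperparameters, not from the text
    """
    # Loss 1: cross-entropy between student predictions and original labels.
    log_p = log_softmax(student_logits)
    hard = -log_p[np.arange(len(labels)), labels].mean()

    # Loss 2: cross-entropy between student predictions and teacher soft labels.
    soft_targets = np.exp(log_softmax(teacher_logits / temperature))
    log_p_t = log_softmax(student_logits / temperature)
    soft = -(soft_targets * log_p_t).sum(axis=-1).mean()

    return alpha * hard + (1.0 - alpha) * soft


# Illustrative usage with made-up logits for a 3-class problem.
student = np.array([[2.0, 0.0, -1.0], [0.0, 1.0, 0.5]])
teacher = np.array([[3.0, 0.5, -2.0], [-1.0, 2.0, 0.0]])
labels = np.array([0, 1])
loss = distillation_loss(student, teacher, labels, alpha=0.5)
```

Setting `alpha=1.0` recovers ordinary supervised training, and `alpha=0.0` trains the student purely on the teacher's soft labels.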

We tested several mixing ratios but did not find any significant difference in test accuracy. We also experimented with several teacher models. At first, we selected the best-performing model (PNASNet-5_Large_331) from the TensorFlow-Slim pretrained models; however, using such a large model dramatically slowed down training. We then switched to lighter teachers, which gave us better results faster.

Additional Datasets

LPIRC did not pose any restrictions on the datasets that could be used for training. The only requirement was to predict the 1,000 ImageNet classes. To the best of our knowledge, there is no other dataset with ImageNet labels; however, we could pretrain our model on a different dataset with a different task. Google released the Open Images Dataset, which is much larger than the ImageNet dataset. In this experiment, we used a teacher model trained on ImageNet to teach the student model its logits on the Open Images Dataset. Training was slow, and because the deadline was approaching fast, we paused this experiment.

Conclusion

Many applications using deep learning techniques have become a crucial part of our daily lives. Our team has already released an image classification network and a segmentation network in the Azar app.

While developing these technologies and competing in LPIRC, we realized that in the world of mobile deep vision there is no silver bullet. To deliver an extremely light and fast segmentation network on a mobile device, we had to combine several known techniques. Designing a network in Python alone does not yield a good lightweight deep neural network. For example, if you use TensorFlow Lite, you have to understand the details of every operation and how well each one is optimized.

At CVPR, we talked with several researchers and learned that the trend is changing fast. Our team's next focus will be on AutoML and 1-bit quantization. Of course, our human-designed networks work well in the Azar app, but we believe that in the future the design should be done by machine[4][5]. There are several works[6][7] using 1-bit quantized neural networks that obtain results comparable to full-precision networks. We expect deep models to become much smaller and faster than they are now. If you want to join us on our journey to solve this challenging problem, please contact our team at ml-contact@hpcnt.com!

References

[1] Y. Cheng, D. Wang, P. Zhou and T. Zhang. A Survey of Model Compression and Acceleration for Deep Neural Networks. December 13, 2017, https://arxiv.org/abs/1710.09282

[2] B. Jacob, S. Kligys, B. Chen, M. Zhu, M. Tang, A. Howard, H. Adam and D. Kalenichenko. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. December 15, 2017, https://arxiv.org/abs/1712.05877

[3] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto and H. Adam. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. April 17, 2017, https://arxiv.org/abs/1704.04861

[4] T. Yang, A. Howard, B. Chen, X. Zhang, A. Go, V. Sze, H. Adam. NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications. April 9, 2018, https://arxiv.org/abs/1804.03230

[5] Y. He, S. Han. ADC: Automated Deep Compression and Acceleration with Reinforcement Learning. February 10, 2018, https://arxiv.org/abs/1802.03494

[6] H. Bagherinezhad, M. Horton, M. Rastegari, A. Farhadi. Label Refinery: Improving ImageNet Classification through Label Progression. May 7, 2018, https://arxiv.org/abs/1805.02641

[7] S. Zhu, X. Dong, H. Su. Binary Ensemble Neural Network: More Bits per Network or More Networks per Bit? June 20, 2018, https://arxiv.org/abs/1806.07550
